In this tutorial, I will demonstrate how to use the k-Means clustering method in RapidMiner. The dataset I am using is in the Zip_Jobs folder (which contains multiple files) that we used for our March 5th Big Data lecture.

- Save the files you want to use in a folder on your computer.

- Open RapidMiner and click “New Process”. On the left-hand pane of your screen there should be a tab labeled "Operators"; this is where you can search for all of the operators in RapidMiner and its extensions. Search the Operators tab for "process documents" to list the document-processing operators.

You should see several Process Documents operators, but the one I will use for this tutorial is “Process Documents from Files”, because it generates word vectors from text stored in multiple files. Drag this operator into the Main Process frame.

- Click “Edit List” beside the “text directories” label in the right-hand pane in order to choose the files that you wish to run the clustering algorithm on.

You can give your directory entry any name you like. Click the folder icon to select the folder that contains your data files, then click “Apply Changes”.
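Outside of RapidMiner, the "text directories" setting boils down to reading every file in a folder. The sketch below uses a hypothetical helper, `load_text_directory`, and assumes UTF-8 files and a non-recursive search; it only illustrates the idea and is not how RapidMiner reads files internally. A throwaway temporary folder stands in for Zip_Jobs so the example runs anywhere.

```python
import tempfile
from pathlib import Path


def load_text_directory(directory: str, pattern: str = "*") -> dict[str, str]:
    """Read every file in `directory` matching `pattern` (non-recursive) into {file name: text}."""
    docs = {}
    for path in sorted(Path(directory).glob(pattern)):
        if path.is_file():
            # errors="replace" keeps going if a file is not valid UTF-8.
            docs[path.name] = path.read_text(encoding="utf-8", errors="replace")
    return docs


# Quick check with a temporary folder standing in for Zip_Jobs.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "job1.html").write_text("<p>Data analyst</p>", encoding="utf-8")
    Path(tmp, "job2.html").write_text("<p>Data engineer</p>", encoding="utf-8")
    Path(tmp, "notes.txt").write_text("not a job posting", encoding="utf-8")
    demo_docs = load_text_directory(tmp, "*.html")

print(sorted(demo_docs))
```

Pointed at your own copy of the data, a call such as `load_text_directory("Zip_Jobs", "*.html")` would return one entry per HTML file; that relative path is an assumption about where you saved the folder.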

- Double-click the “Process Documents from Files” operator to open its subprocess. This is where you will chain operators together to take the documents (HTML files, in my case) and break them down into their word components (note that you can also run the k-Means clustering algorithm on other file types). As highlighted in my previous tutorial, there are several operators designed specifically to break down text documents. Before you get to that point, you need to strip the HTML code out of the documents so that only their text remains. Insert the “Extract Content” operator into this subprocess by searching for it in the Operators tab.

- The next step is to tokenize the documents. Tokenization splits the text into individual words (tokens), which together form the "bag of words" contained in your documents. Search for the "Tokenize" operator and drag it into the "Process Documents from Files" subprocess after the “Extract Content” operator. The only other operator necessary for appropriate document clustering is the “Transform Cases” operator; without it, documents that contain the same words in different cases (for example, "Data" and "data") would be treated as more distant (less similar) than they really are. You should get a process similar to this:

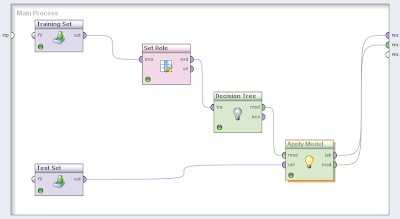
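The three operators in this subprocess can be mimicked in plain Python to show why Transform Cases matters. This is a rough sketch under stated assumptions: `html.parser` stands in for Extract Content, splitting on non-letter characters stands in for Tokenize, and cosine similarity over raw term counts stands in for the similarity that clustering relies on. RapidMiner's operators are more configurable than this.

```python
import math
import re
from collections import Counter
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text and skips <script>/<style> bodies (stand-in for Extract Content)."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def extract_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def tokenize(text: str) -> list[str]:
    # Split on runs of non-letters (ASCII letters only, a simplification).
    return [tok for tok in re.split(r"[^A-Za-z]+", text) if tok]


def bag_of_words(html: str, lowercase: bool) -> Counter:
    tokens = tokenize(extract_text(html))
    if lowercase:  # stand-in for Transform Cases (lower case)
        tokens = [tok.lower() for tok in tokens]
    return Counter(tokens)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors (1.0 means same direction)."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


doc1 = "<html><body><h1>Data Analyst</h1><p>SQL and Python</p></body></html>"
doc2 = "<html><body><p>data analyst: sql and python</p></body></html>"

raw = cosine(bag_of_words(doc1, lowercase=False), bag_of_words(doc2, lowercase=False))
lowered = cosine(bag_of_words(doc1, lowercase=True), bag_of_words(doc2, lowercase=True))
print(f"without Transform Cases: {raw:.2f}, with: {lowered:.2f}")
# prints: without Transform Cases: 0.20, with: 1.00
```

The two toy postings use the same five words, but without lowercasing only "and" matches, so they look far apart; after lowercasing they are identical.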

- Now for the clustering! Leave the “Process Documents from Files” subprocess and return to the Main Process frame. Search for “Clustering” in the Operators tab:

As you can

see, there are several clustering operators and most of them work about the

same. For this tutorial, I chose to demonstrate K-Means clustering since that

is the clustering type that we have discussed most in class. In RapidMiner, you

have the option to choose three different variants of the K-Means clustering

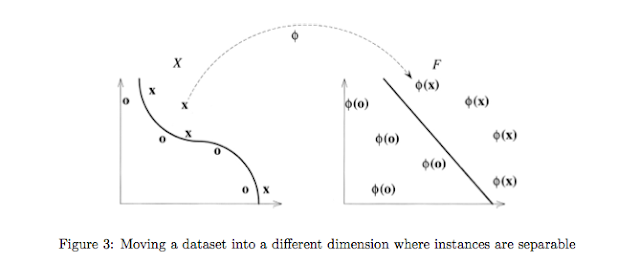

operator. The first one is the standard K-Means, in which similarity between

objects is based on a measure of the distance between them. The K-Means (Kernel)

operator uses kernels to estimate the distance between objects and clusters. The

k-Means (fast) operator uses the Triangle Inequality to accelerate the k-Means

algorithm. For this example, use the standard k-Means algorithm by dragging

into the Main Process frame after the “Process Documents from Files” operator. I

set the k value equal to 4 (since I have 19 files, this should give me roughly

5 files in each cluster) and max runs to about 20.

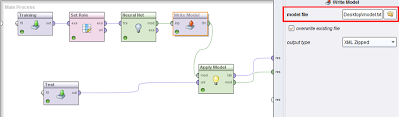
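For readers who want to see what the k and max runs settings control, here is a minimal Python sketch of Lloyd's k-Means algorithm with random restarts. It is an illustration, not RapidMiner's implementation: Euclidean distance, starting from k distinct randomly chosen points, the `max_steps` cap, and keeping the run with the lowest within-cluster sum of squared distances are all assumptions of this sketch.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_once(points, k, rng, max_steps=100):
    """One run of Lloyd's k-Means from k randomly chosen starting points."""
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(max_steps):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [min(range(k), key=lambda c: sq_dist(p, centroids[c])) for p in points]
        if new_labels == labels:
            break  # assignments stopped changing, so this run has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    # Within-cluster sum of squared distances, used to compare runs.
    cost = sum(sq_dist(p, centroids[lab]) for p, lab in zip(points, labels))
    return labels, centroids, cost


def kmeans(points, k, max_runs=20, seed=0):
    """Run k-Means max_runs times from random starts and keep the lowest-cost result."""
    rng = random.Random(seed)
    runs = [kmeans_once(points, k, rng) for _ in range(max_runs)]
    return min(runs, key=lambda run: run[2])


# Two obvious groups of 2-D points; in the tutorial the points would be document vectors.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
labels, centroids, cost = kmeans(points, k=2, max_runs=5)
print(labels, round(cost, 3))
```

With the 19 document vectors from this tutorial, the equivalent call would be `kmeans(vectors, k=4, max_runs=20)`. Restarts matter because a single run can settle in a poor local optimum that depends on its random starting centroids.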

- Connect the output nodes of the “Clustering” operator to the res (result) nodes of the Main Process frame. Click the “Play” button at the top to run the process. Your ExampleSet output should now show the cluster assigned to each document.

If you do not get this output, make sure that all of your nodes are connected correctly and to nodes of the right type. Some errors occur because the output of one operator does not match the type expected at the input of the next operator.

If you are still having trouble, please comment or check out the Rapid-i support forum.