Each step in this tutorial gives written instructions followed by a picture for that step.

Step 1. Start by logging into AWS. Once you have done that, you will see this page. Click "EC2" (Virtual Servers in the Cloud).

Step 2. Click on "Launch Instance."

Step 3. The next page will say "launch with classic wizard." Just click "Continue."

Step 4. The next page will be titled "Request instances wizard." Just click the "Community AMIs" tab.

Step 5. Next to the "Viewing all images" drop-down field, type in "1000HumanGenomes."

Step 6. Once the AMIs have popped up, click "Select" next to the first one.

Step 7. After that you will be taken to the instance types selection. Click the drop down arrow and select the type of instance you would like to use. I chose "M1 Large."

Step 8. Next you will be prompted to create a password in order to access your AMI. Type your password in the text field shown in the picture.

Step 9. Next you will be prompted with a "Storage device configuration" menu. Just click continue.

Step 10. It will ask you if you want to tag your instance. You can just click continue.

Step 11. Next you will be prompted to enter your personal key pair. Enter your key pair into the text field marked in the photo.

Step 12. Next, you will be prompted to enter your security group. Just select the default one.

Step 13. You will be shown all the specifics you requested in the previous steps. Click "Launch" if they are all satisfactory.

Step 14. After that, you will be told that your instance is being launched. Click "close."

Step 15. In your instances section, check the Status Checks column. After a while, it should say "checks passed."

Step 16. After that, you are done. If you have a piece of software called Linux bio cloud on a computer with an Ubuntu Linux operating system, you should be able to work with the data!
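For anyone who would rather script the launch than click through the wizard, here is a rough Python sketch of the same choices. It is not part of the tutorial above, which only uses the web console. It assumes the boto3 library is installed and AWS credentials are configured; the AMI ID, key pair name and region are placeholders that you would replace with your own values.

```python
def build_launch_request(ami_id, key_name, instance_type="m1.large",
                         security_group="default"):
    """Collect the wizard choices (AMI, instance type, key pair,
    security group) as keyword arguments for EC2's run_instances call."""
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        "KeyName": key_name,
        "SecurityGroups": [security_group],
        "MinCount": 1,
        "MaxCount": 1,
    }


def launch(request, region="us-east-1"):
    """Send the request to EC2 and return the new instance ID.

    Needs boto3 and valid AWS credentials; the region is an assumption.
    """
    import boto3  # imported here so the rest of the sketch runs without it

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**request)
    return response["Instances"][0]["InstanceId"]


# Placeholder values: substitute the AMI you selected and your own key pair.
request = build_launch_request(ami_id="ami-PLACEHOLDER", key_name="my-key-pair")
print(request)
```

Calling `launch(request)` would actually start (and bill for) an instance, so the sketch only prints the request.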

Industrial engineering students at Auburn University blog about big data. War Eagle!!

Sunday, April 28, 2013

Tuesday, April 23, 2013

OutWit Hub: Web-scraping made easy

I read a blog earlier this term on web-scraping and decided to check it out. I started with the suggested software, and quickly realized that only a few really good web-scraping tools are available that also support Mac OS. So, after reading a few reviews, I landed on OutWit Hub.

OutWit Hub has 2 versions: Basic and Pro. The difference is in the available tools. In Basic, the "words" tool isn't available. This tool lets you see the frequency of any word as it occurs on the page you are currently viewing. Several of the scraping tools are disabled as well. I've upgraded to Pro; it's only $60 per year and I was curious to see what else it can do.

I'm not a computer scientist, by a long shot, but I have a general grasp on coding and how computers operate. For this reason, I really like OutWit Hub. The tutorials on this site are incredible. They walk you through examples and you can interact with the UI while the tutorial is going. Also, a lot of the tools are pretty intuitive to use. If you're not sold on getting the Pro version, I'd encourage you to visit their website and download the free version just to check out the tutorials. They're really great.

I've used the site for several examples just to test. I needed to get all of the emails off of an organization's website, so instead of copy/pasting everything and praying for the best, I used the "email" feature on OutWit and all of the names and emails of every member on the page populated an exportable table. #boom
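To give a feel for what an email extractor does under the hood, here is a small Python sketch. It is not how OutWit Hub is implemented (I don't know its internals); it just pulls anything that looks like an email address out of a block of text with a simple regular expression. The sample text and addresses are made up.

```python
import re

# A deliberately simple pattern; real-world email syntax is more permissive.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def extract_emails(page_text):
    """Return the email addresses found in page_text, without duplicates,
    in the order they first appear."""
    seen = []
    for match in EMAIL_PATTERN.findall(page_text):
        if match not in seen:
            seen.append(match)
    return seen


page = ("Contact Jane Doe <jane@example.org> or John Roe (john@example.org). "
        "Jane: jane@example.org")
print(extract_emails(page))
```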

Then, I wanted to see if it could be harnessed for Twitter and Facebook. So, using the source-code approach to scraping, I was able to extract text from the loaded parts of my Twitter and Facebook feeds. The problems I encountered were not knowing enough about the coding to make the scraper dynamic enough to work through unloaded pages, and not knowing how to automate it to build a larger dataset (i.e. run the scraper over a set amount of time by continuously reloading the page and harvesting the data). It's possible, I just didn't figure it out.

So, I've videoed a tutorial on how to use OutWit Hub Pro's scraper feature to scrape the loaded part of your Facebook news feed. Below are the written instructions and the video at the bottom gives you the visual.

Essentially, you will:

1.) Launch OutWit Hub (presuming you've downloaded and upgraded to Pro).

2.) Login to your profile on Facebook.

3.) Take note of whatever text you want to capture as a reference point when you go to look in the code. This is assuming you don't know how to read html. For example, if the first person on your news feed says: "Hey check out this video!", then take note of their statement "Hey check out this video!"

4.) Click the "scrapers" item on the left side of the screen.

5.) In the search window, type in the text "Hey check out this video" and observe the indicators in the code that mark the beginning and end of that text.

6.) In the window below the code, click the "New" button.

7.) Type in a name for the scraper.

8.) Click the checkbox in row 1 of the window.

9.) Enter a title/description for the information you're collecting in the first column. Using the same example: "Stuff friends say on FB" or "Text". It really only matters if you're going to be extracting other data from the same page and want to keep it separate.

10.) Under the "Marker Before" column, type in the HTML code that you identified as the beginning of the data that you want to extract.

11.) Repeat step 10 for the next column, using the HTML code that you identified as the end of the data.

12.) Click "Execute".

13.) Your data is now available for export in several formats: CSV, Excel, SQL, HTML, TXT.
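The "marker before / marker after" idea from the steps above can be sketched in a few lines of Python. This is only an illustration of the technique, not OutWit Hub's actual code, and the HTML snippet and `<div class="post">` markers are invented for the example; real Facebook markup will look different.

```python
def scrape_between(source, marker_before, marker_after):
    """Return every piece of text that sits between marker_before and
    marker_after in source, in order of appearance."""
    results = []
    position = 0
    while True:
        start = source.find(marker_before, position)
        if start == -1:
            break
        start += len(marker_before)
        end = source.find(marker_after, start)
        if end == -1:
            break
        results.append(source[start:end])
        position = end + len(marker_after)
    return results


# Hypothetical page source with two posts.
page = ('<div class="post">Hey check out this video!</div>'
        '<div class="post">Happy Friday</div>')
rows = scrape_between(page, '<div class="post">', '</div>')
print(rows)
```

Each item in `rows` corresponds to one row of the table the scraper would produce.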

Here is a Youtube video example of me using it to extract and display comments made by my Facebook friends that appeared on my news feed.

Thursday, April 18, 2013

Introducing Global Terrorism Data

The Global Terrorism Database (GTD) is one of the data sources introduced in the first class. It is managed by the University of Maryland. It has records from 1970 through 2011 and keeps adding recent data on terrorism activities. It is provided as a CSV file, which is plain text, and when it is imported into Excel, the data set looks like the one below.

Each record consists of 98 attributes including ID, time, location, target, weapon, and so on. It is basically a time-series data set. Given this property, the GTD site also provides a graphical interface to the data set, called GTD Data Rivers, which generates a diagram that looks like a big flow of water.

The combo box at the top of the screen shows the list of attributes users can choose. The main part of the screen shows how terrorism activities change over time for the attribute the user chose. By adjusting the red bar at the bottom left, users can narrow down the focus they want to see. The bottom-right part of the screen shows the amount of each element in the Data Rivers diagram above.
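Since the data is plain CSV and basically a time series, counting incidents per year is a natural first step. Below is a minimal Python sketch of that idea. The column names (`eventid`, `iyear`, `country_txt`) are my assumption about the GTD header row, so check them against your own download, and the three sample rows are made up purely for illustration.

```python
import csv
import io
from collections import Counter

# Made-up rows for illustration only; a real GTD export has 98 columns.
SAMPLE_CSV = """eventid,iyear,country_txt
1,1970,Exampleland
2,1970,Exampleland
3,1971,Otherland
"""


def incidents_per_year(csv_file, year_column="iyear"):
    """Count how many records fall in each year of an open CSV file."""
    reader = csv.DictReader(csv_file)
    return Counter(int(row[year_column]) for row in reader)


counts = incidents_per_year(io.StringIO(SAMPLE_CSV))
for year in sorted(counts):
    print(year, counts[year])
```

To run it on the real file, you would pass an opened file object instead of the `io.StringIO` sample.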

Reference: http://www.start.umd.edu/gtd/

Thursday, April 4, 2013

Text mining techniques used for hiring process

This tutorial is about sorting and clustering resumes using text mining techniques. Hiring new individuals is a crucial function of every company. The pool of resumes a company receives during recruitment is far larger than the number of people assigned to review them. Text mining techniques are required in order to sort and filter on keywords such as internships, relevant skills, experiences, etc. Based on those keywords, various categories can be defined and resumes can be categorized, ultimately leading to the selection of better individuals. The video posted below shows different techniques used to filter resumes.
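As a rough illustration of keyword-based categorization, here is a small Python sketch. It is not the method shown in the video; the category names and keyword lists are hypothetical and far too short for real use.

```python
import re

# Hypothetical categories and keywords, chosen only for the example.
CATEGORIES = {
    "internships": {"internship", "intern", "co-op"},
    "skills": {"python", "excel", "sql"},
    "experience": {"managed", "led", "years"},
}


def categorize(resume_text, categories=CATEGORIES):
    """Return the sorted names of all categories whose keywords
    appear in the resume text (case-insensitive)."""
    words = set(re.findall(r"[a-z][a-z\-]*", resume_text.lower()))
    return sorted(name for name, keywords in categories.items()
                  if words & keywords)


resume = "Summer internship at a logistics firm. Skills: Python, SQL."
print(categorize(resume))
```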

Tuesday, April 2, 2013

Tutorial: Python 3.3.0

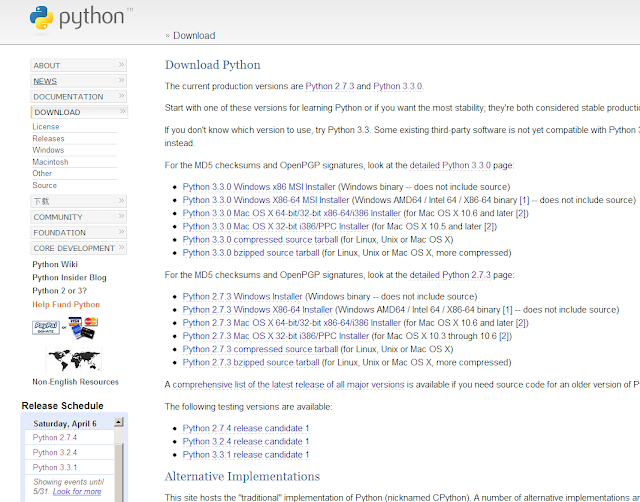

If we need to use Amazon EC2 for the Big Data project, we have to know the Python programming language. Xinyu explained the example we used in class. Python runs on Windows, Linux/Unix, and Mac OS X, and has been ported to the Java and .NET virtual machines.

Python is free to use. Today I want to show you how to install Python and point you to a detailed tutorial.

1. Go to www.python.org

2. Choose the right installer for your OS on the download page. I chose Python 3.3.0 Windows X86-64.

3. Follow the steps to install it.

4. Choose IDLE in your programs to start programming.

5. If you want to program in files, you can choose File >> New Window. When you are done programming, you can click Run >> Run Module.
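If you want something to try right away in a new file, a tiny first program like the one below should do. The function name `greet` is just an example I made up.

```python
def greet(name):
    """Build a greeting for the given name."""
    return "Hello, " + name + "!"


print(greet("Big Data"))
```

Save the file with a `.py` extension and run it; the greeting should appear in the IDLE shell window.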

Now you know how to install and use Python. Next I want to point you to a detailed tutorial for programming in Python. It is a YouTube playlist. The playlist address is https://www.youtube.com/watch?v=4Mf0h3HphEA&list=ECEA1FEF17E1E5C0DA You might need 10 hrs to get through it. It is very helpful.

Sunday, March 31, 2013

Crunching Big Data with Google Big Query

Ryan Boyd, a developer advocate at Google who focuses on Google BigQuery, presents the first part of this video; in his five years at Google, he helped build the Google Apps ISV ecosystem. Tomer Shiran, the director of the product management team at MapR and a founding member of Apache Drill, presents the second part of this video.

Developers have to deal with many different kinds of data and with large volumes of it. Without good analysis software and useful analysis methods, they spend a lot of time collecting large amounts of data and then throw away data that looks worthless, even though most of the time that data has potential value of its own. Google knows Big Data well, since every minute countless users are using Google’s products such as YouTube, Google Search, Google+ or Gmail. With these large amounts of data, Google began to offer APIs and other technologies that let developers focus on their own fields.

Google BigQuery is the public service built on Dremel, a Google internal technology for Big Data analysis. As for Apache Drill, Wikipedia says: “Apache Drill is an open-source software framework that supports data-intensive distributed applications for interactive analysis of large-scale datasets. Drill is the open source version of Google's Dremel system which is available as an infrastructure service called Google BigQuery. One explicitly stated design goal is that Drill is able to scale to 10,000 servers or more and to be able to process petabytes of data and trillions of records in seconds. Currently, Drill is incubating at Apache.” Apache Drill lets users query terabytes of data in seconds and supports the Protocol Buffers, Avro and JSON data formats; as data sources, it can use Hadoop and HBase.

MapR Technologies offers an open, enterprise-grade distribution for Hadoop that is easy, dependable and fast to use, and that is open source with standards-based extensions. MapR is deployed at thousands of companies, from small Internet startups to the world’s largest enterprises. MapR customers analyze massive amounts of data, including hundreds of billions of events daily, data from ninety percent of the world’s Internet population monthly, and data from one trillion dollars in retail purchases annually. MapR has partnered with Google to provide Hadoop on Google Compute Engine.

The Drill execution engine has two layers: an operator layer and an execution layer. The operator layer is serialization-aware and processes individual records. The execution layer is not serialization-aware; it processes batches of records and is responsible for communication, dependencies and fault tolerance. MapR can provide the best Big Data processing capabilities and is the leading Hadoop innovator.

Sources:

http://en.wikipedia.org/wiki/Apache_Drill

Friday, March 29, 2013

k-Means Clustering Tutorial in RapidMiner

In this tutorial, I will attempt to demonstrate how to use the k-Means clustering method in RapidMiner. The dataset I am using is contained in the Zip_Jobs folder (contains multiple files) used for our March 5th Big Data lecture.

- Save the files you want to use in a folder on your computer.

- Open RapidMiner and click “New Process”. On the left hand pane of your screen, there should be a tab that says "Operators"- this is where you can search and find all of the operators for RapidMiner and its extensions. By searching the Operators tab for "process documents", you should get an output like this (you can double click on the images below to enlarge them):

You should see several Process Documents operators, but the one that I will use for this tutorial is the “Process Documents from Files” operator because it allows you to generate word vectors from text stored in multiple files. Drag this operator into the Main Process frame.

- Click “Edit List” beside the “text directories” label in the right-hand pane in order to choose the files that you wish to run the clustering algorithm on.

You can give your directory whatever name you wish. Click the folder icon to select the folder that contains your data files. Click “Apply Changes”.

- Double click the “Process Documents from Files” operator to get inside the operator. This is where you will link operators together to take the (in my case) html documents and split them down into their word components (please note that you can run the K-Means Clustering algorithm with a different type of file). As highlighted in my previous tutorial, there are several operators designed specifically to break down text documents. Before you get to that point, you need to strip the html code out of the documents in order to get to their word components. Insert the “Extract Content” operator into the Main Process frame by searching for it in the Operators tab.

- The next thing that you would want to do to your files is to tokenize them. Tokenization creates a "bag of words" that are contained in your documents. Search for the "Tokenize" operator and drag it into the "Process Documents from Files" process after the “Extract Content” operator. The only other operator that is necessary for appropriate clustering of documents is the “Transform Cases” operator; without it, documents that have the same words in different cases would be treated as more distant (less similar) than they really are. You should get a process similar to this:

- Now for the clustering! Click out of the “Process Documents from Files” process. Search for “Clustering” in the Operators Tab:

As you can see, there are several clustering operators and most of them work about the same. For this tutorial, I chose to demonstrate k-Means clustering since that is the clustering type that we have discussed most in class. In RapidMiner, you have the option to choose three different variants of the k-Means clustering operator. The first one is the standard k-Means, in which similarity between objects is based on a measure of the distance between them. The k-Means (Kernel) operator uses kernels to estimate the distance between objects and clusters. The k-Means (fast) operator uses the Triangle Inequality to accelerate the k-Means algorithm. For this example, use the standard k-Means operator by dragging it into the Main Process frame after the “Process Documents from Files” operator. I set the k value equal to 4 (since I have 19 files, this should give me roughly 5 files in each cluster) and max runs to about 20.
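If you are curious what the process is doing conceptually, here is a small Python sketch of the same pipeline: lower-case and tokenize the documents, build word-count vectors, and run standard k-Means on them. It is a simplified illustration, not RapidMiner's implementation. It seeds the centroids with the first k documents to stay deterministic, it does not reproduce the "max runs" setting, and the four short job-ad texts are made up.

```python
import re
from collections import Counter


def tokenize(text):
    """Lower-case the text (like Transform Cases) and split it into
    word tokens (like Tokenize)."""
    return re.findall(r"[a-z]+", text.lower())


def word_vectors(documents):
    """Turn each document into a vector of word counts over a shared vocabulary."""
    counts = [Counter(tokenize(doc)) for doc in documents]
    vocabulary = sorted(set().union(*counts))
    return [[c[word] for word in vocabulary] for c in counts]


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def k_means(vectors, k, max_iterations=20):
    """Standard k-Means; returns one cluster label per vector.
    The first k vectors are used as the starting centroids."""
    centroids = [list(v) for v in vectors[:k]]
    labels = None
    for _ in range(max_iterations):
        # Assignment step: each vector joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: squared_distance(v, centroids[j]))
            for v in vectors
        ]
        if new_labels == labels:
            break  # assignments stopped changing
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [v for v, label in zip(vectors, labels) if label == j]
            if members:
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels


# Made-up documents; note the mixed upper and lower case.
documents = [
    "Nurse needed for hospital night shift",
    "Truck driver wanted for delivery route",
    "NURSE needed for HOSPITAL day shift",
    "truck DRIVER wanted for freight route",
]
labels = k_means(word_vectors(documents), k=2)
print(labels)
```

Because `tokenize` lower-cases everything first, the two nurse ads end up in one cluster and the two truck driver ads in the other, even though they are written in different cases.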

- Connect the output nodes from the “Clustering” operator to the res nodes of the Main Process frame. Click the “Play” button at the top to run. Your ExampleSet output should look like this:

If you do not get this output, make sure that all of your nodes are connected correctly and to the right type of port. Some errors occur because the output at one node does not match the type expected at the input of the next operator.

If you are still having trouble, please comment or check out the Rapid-i support forum.

Thursday, March 28, 2013

Splunk as a Big Data Platform for Developers

Damien Dallimore, the developer evangelist at Splunk, presents this video about Splunk as a Big Data platform for developers. In this video, you will see an overview of the Splunk platform, how to use Splunk, the Splunk Java SDK, the conference integration Splunk extensions, and some other JVM/Java related tools.

Splunk is an engine for collecting, aggregating and correlating machine data. At the same time, Splunk provides visibility, reports and searches across IT systems and infrastructure, and it will not lock you into a fixed schema. You can download Splunk and install it in five minutes, and it runs on all modern platforms. In addition, Splunk has an open and extensible architecture. It can index any machine data. For example, it can:

- capture events from logs in real time;

- run scripts to gather system metrics and connect to APIs and databases;

- listen to syslog and raw TCP/UDP, and gather Windows events;

- universally index any data format, so it doesn’t need adapters;

- stream in data directly from your application code;

- decode binary data and feed it in.

Splunk can centralize data across the environment. The Splunk Universal Forwarder sends data to the Splunk Indexer from remote systems, uses minimal system resources, is easy to install and deploy, and delivers secure, distributed, real-time universal data collection for tens of thousands of endpoints.

Splunk scales to TBs/day and thousands of users: automatic load balancing linearly scales indexing, and distributed search and MapReduce linearly scale search and reporting. Splunk provides strong machine data governance, with comprehensive controls for data security, retention and integrity, and single sign-on integration enables pass-through authentication of user credentials.

Splunk is an implementation of the Map Reduce algorithmic approach; it is not Apache Hadoop MapReduce (MR), the product. Splunk is not agnostic of its underlying data source and is optimal for time-series-based data. Splunk is an end-to-end integrated Big Data solution with fine-grained protection of access and data using role-based permissions, as well as data retention and aging controls. When users use Splunk, they can submit “Map Reduce” jobs without needing to know how to code a job.

Splunk has four primary functions: searching and reporting, indexing and search services, local and distributed management, and data collection and forwarding. Developers can use Splunk to accelerate development and testing, to integrate data from Splunk into their existing IT environment for operational visibility, and to build custom solutions that deliver real-time business insights from Big Data. In conclusion, Splunk is an integrated, enterprise-ready Big Data platform.

Wednesday, March 20, 2013

Tutorial on creating a confusion matrix using Orange

Confusion Matrix

The following video shows a tutorial on how to create a confusion matrix using Orange. I used the same dataset as an existing confusion matrix example on YouTube, as it is the only one available in Orange that effectively generates a confusion matrix. The screen recording is not the best, as I had to record the video and audio separately and this may have caused some lag. I tried at least 3-4 different kinds of recording tools but had compatibility issues with Windows 8.

This is a very useful tool and can be used extensively in analytics. Hopefully the video is clear enough; if not, I can write a step-by-step explanation. I made the tutorial to visualize the results and to share with the class how to use this feature in Orange, which I learnt from various tutorials online.

It uses a dataset which categorizes 3 different types of flowers (Iris setosa, Iris versicolor and Iris virginica) in terms of sepal length and width and petal length and width. The confusion matrix shows how the system might sometimes wrongly categorize these flowers based on closely matching attributes, even with a well categorized Excel file.
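For readers who want to see what a confusion matrix is without opening Orange, here is a minimal Python sketch. It does not use Orange at all, and the actual and predicted labels are made up for illustration; they are not results from the video.

```python
from collections import Counter


def confusion_matrix(actual, predicted, labels):
    """Rows are the actual classes, columns are the predicted classes.
    Cell [i][j] counts items of class labels[i] predicted as labels[j]."""
    pair_counts = Counter(zip(actual, predicted))
    return [[pair_counts[(a, p)] for p in labels] for a in labels]


labels = ["setosa", "versicolor", "virginica"]
# Made-up labels for illustration, not output from Orange.
actual = ["setosa", "setosa", "versicolor", "versicolor", "virginica", "virginica"]
predicted = ["setosa", "setosa", "versicolor", "virginica", "virginica", "versicolor"]

matrix = confusion_matrix(actual, predicted, labels)
for label, row in zip(labels, matrix):
    print(label.ljust(12), row)
```

The diagonal holds the correctly classified flowers; everything off the diagonal is a mix-up, here between versicolor and virginica.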

Tuesday, March 19, 2013

Basics of Clustering and problems

Definition of a Cluster: A cluster is a set of two or more server nodes dedicated to keeping application services alive. The nodes communicate with each other through the cluster software/framework, test and probe the health status of servernodes/services, and use quorum-based decisions and switchover/failover techniques to keep the application services running on them available. That is, should a node that runs a service unexpectedly lose functionality/connection, the other ones would take over and run the services, so that availability is guaranteed. The main goal of a cluster is to provide availability while strictly sticking to a consistent cluster configuration.

At this point we have to add that this defines an HA cluster, a High-Availability cluster, where the clusternodes are planned to run the services in an active-standby, or failover, fashion. An example could be a single-instance database. Some applications can be run in a distributed or scalable fashion. In the latter case, instances of the application run actively on separate clusternodes, serving service requests simultaneously. An example of this could be a webserver that forwards connection requests to many backend servers in a round-robin way, or a database running in an active-active RAC setup.
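To make the round-robin idea concrete, here is a tiny Python sketch of a dispatcher that hands each incoming request to the next backend in turn. The class and the node names are hypothetical; real cluster software also handles health checks and failover, which this leaves out.

```python
from itertools import cycle


class RoundRobinDispatcher:
    """Hands out backends in a fixed rotating order."""

    def __init__(self, backends):
        self._backends = cycle(backends)

    def route(self, request):
        """Return (backend, request) for the next backend in the rotation."""
        return next(self._backends), request


dispatcher = RoundRobinDispatcher(["node-a", "node-b", "node-c"])
assignments = [dispatcher.route("request-%d" % i)[0] for i in range(5)]
print(assignments)
```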

Now, what is a cluster made of? Servers, right. These servers (the clusternodes) need to communicate. This of course happens over the network, usually over dedicated network interfaces interconnecting all the clusternodes. These connections are called interconnects.

How many clusternodes are in a cluster? There are different cluster topologies. The simplest one is a clustered pair topology, involving only two clusternodes:

There are several more topologies; clicking the image above will take you to the relevant documentation.

Also, to answer the question: Solaris Cluster allows you to run up to 16 servers in a cluster.

Where shall these clusternodes be placed? A very important question. The right answer is: it depends on what you plan to achieve with the cluster. Do you plan to avoid only a server outage? Then you can place them right next to each other in the datacenter. Do you need to avoid a datacenter outage? In that case, of course, you should place them at least in different fire zones, or in two geographically distant datacenters to avoid disasters like floods, large-scale fires or power outages. We call this a stretched or campus cluster, the clusternodes being several kilometers away from each other. To cover really large distances, you probably need to move to a GeoCluster, which is a different kind of animal.

There are a number of problems with clustering. Among them:

- current clustering techniques do not address all the requirements adequately (and concurrently);

- dealing with a large number of dimensions and a large number of data items can be problematic because of time complexity;

- the effectiveness of the method depends on the definition of “distance” (for distance-based clustering);

- if an obvious distance measure doesn’t exist we must “define” it, which is not always easy, especially in multi-dimensional spaces;

- the result of the clustering algorithm (that in many cases can be arbitrary itself) can be interpreted in different ways.
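The point about the definition of “distance” is easy to show with a few lines of Python. In this sketch, the same data point is assigned to a different cluster center depending on whether Euclidean or Manhattan distance is used. The point and the two centers are made-up numbers picked to show the effect.

```python
import math


def euclidean(a, b):
    """Straight-line distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a, b):
    """Sum of the absolute differences along each dimension."""
    return sum(abs(x - y) for x, y in zip(a, b))


def nearest(point, centers, distance):
    """Return the name of the center closest to point under the given distance."""
    return min(centers, key=lambda name: distance(point, centers[name]))


centers = {"A": (3, 3), "B": (0, 5)}
point = (0, 0)

print(nearest(point, centers, euclidean))  # A (about 4.24 versus 5)
print(nearest(point, centers, manhattan))  # B (6 versus 5)
```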

Response - Heat Map in Google Docs

After seeing the tutorial by Gang on creating a heat map in Google Docs, I decided to see if I could use this to make a visual tool for the percentage of all U.S. households that are same-sex households. Using the heat map gadget makes it easy to see the intensity for each state. The generated heat maps can be seen below:

2000:

2010:

From these maps it is easy to see that the western and northeastern parts of the United States have darker shaded areas, corresponding to higher percentages of same-sex households. Doing some research into these regions, you will find more states that are consistently liberal, with policies supporting same-sex couples. It is also apparent that some of the darker states seem to bleed over into nearby states, suggesting that they potentially influence the states around them.

The data can be found at the following: http://www.census.gov/hhes/samesex/
